Fission and Fusion

- by Richard M J Renneboog and ScienceIQ.com

In nuclear fission, a heavy atomic nucleus splits apart to form two smaller atomic nuclei, releasing energy and energetic particles. Although this happens naturally, spontaneous fission is extremely rare. Vastly more common is the opposite process, 'fusion', in which two very light atomic nuclei fuse together to form a heavier atomic nucleus. Every star in the universe works on this principle.

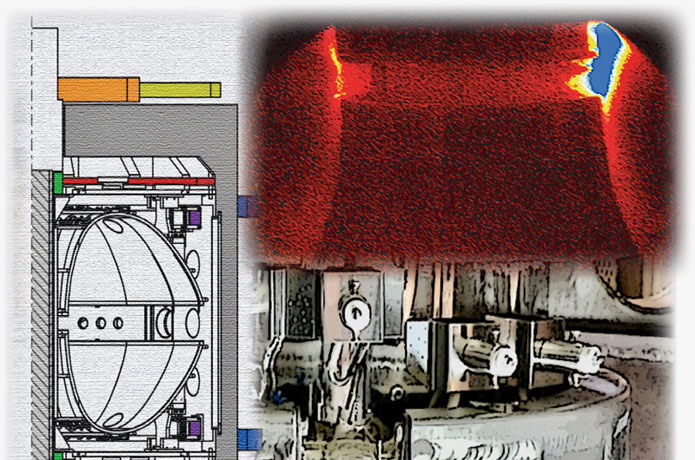

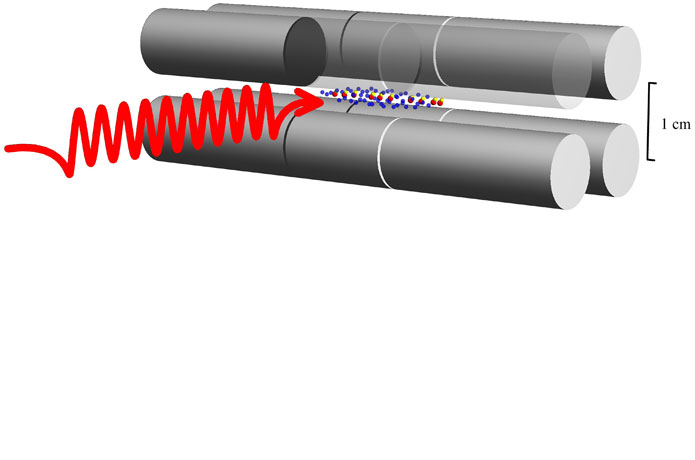

In the nuclear fusion process described here, the product is a helium nucleus consisting of two protons and two neutrons. Interestingly, this is the same particle, the alpha particle, that many radioactive materials emit when they decay. Forming the helium nucleus through fusion requires joining two deuterium nuclei. Deuterium is an isotope of hydrogen in which each nucleus contains both a proton and a neutron, rather than just the single proton of the ordinary hydrogen nucleus. A single helium nucleus has far less energy than two separate deuterium nuclei, so, as one might expect, a large amount of energy is released when nuclear fusion occurs. But there is also a very large energy barrier to overcome in order to bring the deuterium nuclei together and make them fuse. Think of it as a switch that you have to hit with a very heavy hammer in order to get the lights to come on. In this case, the 'hammer' is an atomic bomb!
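To put a rough number on that "large energy difference", the short sketch below applies E = mc² to the mass that disappears when two deuterium nuclei become one helium nucleus. It takes the simplified reaction described above at face value. The masses are rounded reference values, and the function name `fusion_energy_mev` is purely illustrative.

```python
# Atomic masses in unified atomic mass units (u), rounded reference values.
DEUTERIUM_MASS_U = 2.014102
HELIUM4_MASS_U = 4.002603

# Energy equivalent of one atomic mass unit, from E = mc^2.
MEV_PER_U = 931.494


def fusion_energy_mev(reactant_masses_u, product_masses_u):
    """Return the energy released (MeV) from the mass lost in a reaction.

    Hypothetical helper for illustration: it only compares total mass
    before and after, and says nothing about how likely the reaction is.
    """
    mass_defect_u = sum(reactant_masses_u) - sum(product_masses_u)
    return mass_defect_u * MEV_PER_U


# Simplified picture from the text: deuterium + deuterium -> helium-4.
q_mev = fusion_energy_mev(
    [DEUTERIUM_MASS_U, DEUTERIUM_MASS_U],
    [HELIUM4_MASS_U],
)
print(f"Energy released per helium nucleus formed: {q_mev:.1f} MeV")
```

Under these assumptions the result is just under 24 MeV per helium nucleus formed. For comparison, chemical reactions typically release only a few electron-volts per molecule, which is millions of times less.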

To trigger the nuclear fusion reaction at the heart of the 'hydrogen bomb', the deuterium must be struck with an explosive force equivalent to that of a conventional atomic bomb based on nuclear fission. The result is catastrophic.