Posted on: Jun 15, 2018

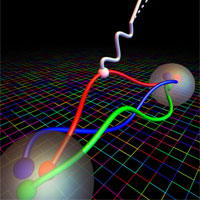

In this illustration, the grid in the background represents the computational lattice that theoretical physicists used to calculate a particle property known as nucleon axial coupling. This property determines how a W boson (white wavy line) interacts with one of the quarks in a neutron (large transparent sphere in foreground), emitting an electron (large arrow) and antineutrino (dotted arrow) in a process called beta decay. This process transforms the neutron into a proton (distant transparent sphere). (Credit: Evan Berkowitz/Jülich Research Center, Lawrence Livermore National Laboratory)

Experiments that measure the lifetime of neutrons reveal a perplexing and unresolved discrepancy. While this lifetime has been measured to a precision within 1 percent using different techniques, apparent conflicts in the measurements offer the exciting possibility of learning about as-yet undiscovered physics.

Now, a team led by scientists in the Nuclear Science Division at the Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab) has enlisted powerful supercomputers to calculate a quantity known as the “nucleon axial coupling,” or gA – which is central to our understanding of a neutron’s lifetime – with an unprecedented precision. Their method offers a clear path to further improvements that may help to resolve the experimental discrepancy.

To achieve their results, the researchers created a microscopic slice of a simulated universe to provide a window into the subatomic world. Their study was published online May 30 in the journal Nature.

The nucleon axial coupling is more exactly defined as the strength with which one component (known as the axial component) of the “weak current” of the Standard Model of particle physics couples to the neutron. The weak current arises from the weak force, one of the four known fundamental forces of the universe, and is responsible for radioactive beta decay – the process by which a neutron decays to a proton, an electron, and an antineutrino.
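In symbols, the decay and the coupling described above are conventionally written as follows. This is the standard textbook notation rather than anything quoted from the study: $u$ and $d$ are the up- and down-quark fields, $\bar{u}_p$ and $u_n$ are the proton and neutron spinors, and the matrix element is taken at zero momentum transfer.

```latex
n \;\to\; p + e^{-} + \bar{\nu}_e
\qquad\qquad
\langle p \,|\, \bar{u}\,\gamma^{\mu}\gamma_{5}\, d \,|\, n \rangle
  \;=\; g_A \, \bar{u}_p \,\gamma^{\mu}\gamma_{5}\, u_n
```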

In addition to measurements of the neutron lifetime, precise measurements of neutron beta decay are also used to probe new physics beyond the Standard Model. Nuclear physicists seek to resolve the lifetime discrepancy, and to complement experimental results, by determining gA more precisely.

The researchers turned to quantum chromodynamics (QCD), a cornerstone of the Standard Model that describes how quarks and gluons interact with each other. Quarks and gluons are the fundamental building blocks for larger particles, such as neutrons and protons. The dynamics of these interactions determine the mass of the neutron and proton, and also the value of gA.

But sorting through QCD’s inherent complexity to produce these quantities requires the aid of massive supercomputers. In the latest study, researchers applied a numeric simulation known as lattice QCD, which represents QCD on a finite grid.

A type of mirror-flip symmetry in particle interactions called parity (like swapping your right and left hands) is respected by the interactions of QCD. The axial component of the weak current, however, flips parity, and parity is not respected by nature (analogously, most of us are right-handed). Because nature breaks this symmetry, the value of gA can only be determined through experimental measurements or theoretical predictions with lattice QCD.

The team’s new theoretical determination of gA is based on a simulation of a tiny piece of the universe – the size of a few neutrons in each direction. They simulated a neutron transitioning to a proton inside this tiny section of the universe, in order to predict what happens in nature.

The model universe contains one neutron amid a sea of quark-antiquark pairs that are bustling under the surface of the apparent emptiness of free space.

“Calculating gA was supposed to be one of the simple benchmark calculations that could be used to demonstrate that lattice QCD can be utilized for basic nuclear physics research, and for precision tests that look for new physics in nuclear physics backgrounds,” said André Walker-Loud, a staff scientist in Berkeley Lab’s Nuclear Science Division who led the new study. “It turned out to be an exceptionally difficult quantity to determine.”

This is because lattice QCD calculations are complicated by exceptionally noisy statistical results that had thwarted major progress in reducing uncertainties in previous gA calculations. Some researchers had previously estimated that it would require the next generation of the nation’s most advanced supercomputers to achieve a 2 percent precision for gA by around 2020.

The team participating in the latest study developed a way to improve their calculations of gA using an unconventional approach and supercomputers at Oak Ridge National Laboratory (Oak Ridge Lab) and Lawrence Livermore National Laboratory (Livermore Lab). The study involved scientists from more than a dozen institutions, including researchers from UC Berkeley and several other Department of Energy national labs.

Chia Cheng “Jason” Chang, the lead author of the publication and a postdoctoral researcher in Berkeley Lab’s Nuclear Science Division for the duration of this work, said, “Past calculations were all performed amidst this more noisy environment,” which clouded the results they were seeking. Chang has since joined the Interdisciplinary Theoretical and Mathematical Sciences Program at RIKEN in Japan as a research scientist.

Walker-Loud added, “We found a way to extract gA earlier in time, before the noise ‘explodes’ in your face.”
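The noise growth Walker-Loud describes can be illustrated with the well-known Parisi-Lepage estimate, in which the signal-to-noise ratio of a nucleon correlation function falls roughly as exp(-(M_N - 1.5 m_π) t) with Euclidean time t. The sketch below is not the team's analysis. The function name and the rounded physical masses (939 MeV and 140 MeV) are assumptions chosen for illustration, and actual lattice calculations often use other pion masses.

```python
import math

HBARC_MEV_FM = 197.327  # hbar * c in MeV * fm, used to convert fm to 1/MeV


def relative_signal_to_noise(t_fm, m_nucleon_mev=939.0, m_pion_mev=140.0):
    """Parisi-Lepage estimate of S/N(t) relative to S/N(0).

    Assumes S/N ~ exp(-(M_N - 1.5 * m_pi) * t); the masses are rounded
    physical values chosen for illustration (hypothetical defaults).
    """
    gap_mev = m_nucleon_mev - 1.5 * m_pion_mev
    return math.exp(-gap_mev * t_fm / HBARC_MEV_FM)


if __name__ == "__main__":
    # Print how quickly the relative signal-to-noise falls with time.
    for t in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(f"t = {t:.1f} fm  relative S/N ~ {relative_signal_to_noise(t):.3g}")
```

Under these assumptions the relative signal-to-noise drops to a few percent of its starting value by about 1 femtometer of Euclidean time, which is why information extracted at earlier times is so much cleaner.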

Chang said, “We now have a purely theoretical prediction of the lifetime of the neutron, and it is the first time we can predict the lifetime of the neutron to be consistent with experiments.”
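The link between gA and the lifetime can be sketched with the standard relation in which the neutron lifetime is proportional to 1 / (1 + 3 gA²), with the other factors held fixed. The snippet below shows how a relative uncertainty in gA propagates to the predicted lifetime under that assumption. It is not the team's error budget. The function names are hypothetical, and the input gA ≈ 1.27 is an illustrative value close to commonly quoted ones, not a number taken from this article.

```python
def lifetime_sensitivity(g_a):
    """Return |d ln(tau) / d ln(g_A)| assuming tau is proportional to 1 / (1 + 3 g_A^2)."""
    return 6.0 * g_a**2 / (1.0 + 3.0 * g_a**2)


def relative_lifetime_uncertainty(g_a, rel_unc_g_a):
    """First-order propagation of a relative g_A uncertainty to the lifetime."""
    return lifetime_sensitivity(g_a) * rel_unc_g_a


if __name__ == "__main__":
    g_a = 1.27  # illustrative input (assumption, not from the article)
    for rel in (0.01, 0.003):
        out = relative_lifetime_uncertainty(g_a, rel)
        print(f"{rel:.1%} on g_A -> about {out:.2%} on the lifetime")
```

With this input the sensitivity factor is a little under 1.7, so a given percentage uncertainty in gA becomes a somewhat larger percentage uncertainty in the predicted lifetime.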

“This was an intense 2 1/2-year project that only came together because of the great team of people working on it,” Walker-Loud said.

This latest calculation also places tighter constraints on a branch of physics theories that stretch beyond the Standard Model – constraints that exceed those set by powerful particle collider experiments at CERN’s Large Hadron Collider. But the calculations aren’t yet precise enough to determine if new physics has been hiding in the gA and neutron lifetime measurements.

Chang and Walker-Loud noted that the main limitation to improving upon the precision of their calculations is in supplying more computing power.

“We don’t have to change the technique we’re using to get the precision necessary,” Walker-Loud said.

The latest work builds upon decades of research and computational resources by the lattice QCD community. In particular, the research team relied upon QCD data generated by the MILC Collaboration; an open source software library for lattice QCD called Chroma, developed by the USQCD collaboration; and QUDA, a highly optimized open source software library for lattice QCD calculations.

The team drew heavily upon the power of Titan, a supercomputer at Oak Ridge Lab equipped with graphics processing units, or GPUs, in addition to more conventional central processing units, or CPUs. GPUs have evolved from their early use in accelerating video game graphics to current applications in evaluating large arrays for tackling complicated algorithms pertinent to many fields of science.

The axial coupling calculations used about 184 million “Titan hours” of computing power – it would take a single laptop computer with a large memory about 600,000 years to complete the same calculations.
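As a quick check on the scale of these figures, the arithmetic below works out what the two quoted numbers imply about each other. The article does not define a “Titan hour,” so the snippet treats it as an opaque unit and only computes ratios. The function names and the use of a 365.25-day year are assumptions.

```python
HOURS_PER_YEAR = 365.25 * 24  # assumes a 365.25-day year


def titan_hours_in_years(titan_hours=184e6):
    """Years needed to run the quoted Titan hours one after another."""
    return titan_hours / HOURS_PER_YEAR


def implied_laptop_hours_per_titan_hour(titan_hours=184e6, laptop_years=600_000):
    """Laptop hours that correspond to one Titan hour, per the quoted figures."""
    return laptop_years * HOURS_PER_YEAR / titan_hours


if __name__ == "__main__":
    print(f"Sequential Titan hours: ~{titan_hours_in_years():,.0f} years")
    print(f"Implied ratio: ~{implied_laptop_hours_per_titan_hour():.1f} laptop hours per Titan hour")
```

Taken at face value, the quoted numbers imply that one Titan hour is worth a few dozen hours on the laptop the article has in mind.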

As the researchers worked through their analysis of this massive set of numerical data, they realized that more refinements were needed to reduce the uncertainty in their calculations.

Staff at the Oak Ridge Leadership Computing Facility helped the team use its 64 million Titan-hour allocation efficiently. The team also turned to the Multiprogrammatic and Institutional Computing program at Livermore Lab, which gave them more computing time to resolve their calculations and reduce their uncertainty margin to just under 1 percent.

“Establishing a new way to calculate gA has been a huge rollercoaster,” Walker-Loud said.

With more statistics from more powerful supercomputers, the research team hopes to drive the uncertainty margin down to about 0.3 percent. “That’s where we can actually begin to discriminate between the results from the two different experimental methods of measuring the neutron lifetime,” Chang said. “That’s always the most exciting part: When the theory has something to say about the experiment.”
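A rough sense of what “more statistics” means can be had from the usual rule that a purely statistical uncertainty shrinks as one over the square root of the number of samples. The sketch below applies that rule to the quoted target. It assumes the uncertainty is entirely statistical and rounds the current value to 1 percent (the article says “just under 1 percent”), so it is an order-of-magnitude illustration only. The function name is hypothetical.

```python
def statistics_factor(current_rel_unc, target_rel_unc):
    """Factor by which the sample count must grow if the error scales as 1/sqrt(N)."""
    if current_rel_unc <= 0 or target_rel_unc <= 0:
        raise ValueError("uncertainties must be positive")
    return (current_rel_unc / target_rel_unc) ** 2


if __name__ == "__main__":
    # 1 percent is a rounding of the article's "just under 1 percent" (assumption).
    factor = statistics_factor(0.01, 0.003)
    print(f"Roughly {factor:.0f}x more statistics under the 1/sqrt(N) assumption")
```

Under these assumptions, going from about 1 percent to 0.3 percent takes on the order of ten times the statistics, which is consistent with the team's emphasis on more powerful machines. Systematic uncertainties do not follow this scaling and are ignored here.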

He added, “With improvements, we hope that we can calculate things that are difficult or even impossible to measure in experiments.”

Already, the team has applied for time on a next-generation supercomputer at Oak Ridge Lab called Summit, which would greatly speed up the calculations.

In addition to researchers at Berkeley Lab and UC Berkeley, the science team also included researchers from University of North Carolina, RIKEN BNL Research Center at Brookhaven National Laboratory, Lawrence Livermore National Laboratory, the Jülich Research Center in Germany, the University of Liverpool in the U.K., the College of William & Mary, Rutgers University, the University of Washington, the University of Glasgow in the U.K., NVIDIA Corp., and Thomas Jefferson National Accelerator Facility.

One of the study participants is a scientist at the National Energy Research Scientific Computing Center (NERSC). The Titan supercomputer is a part of the Oak Ridge Leadership Computing Facility (OLCF). NERSC and OLCF are DOE Office of Science User Facilities.

The work was supported by Laboratory Directed Research and Development programs at Berkeley Lab, the U.S. Department of Energy’s Office of Science, the Nuclear Physics Double Beta Decay Topical Collaboration, the DOE Early Career Award Program, the NVIDIA Corporation, the Joint Sino-German Research Projects of the German Research Foundation and National Natural Science Foundation of China, RIKEN in Japan, the Leverhulme Trust, the National Science Foundation’s Kavli Institute for Theoretical Physics, DOE’s Innovative and Novel Computational Impact on Theory and Experiment (INCITE) program, and the Lawrence Livermore National Laboratory Multiprogrammatic and Institutional Computing program through a Tier 1 Grand Challenge award.
